Robotic Collaboration through Scalable Reactive Synthesis

As human-robot collaboration is scaled up to more and more complex tasks, there is an increased need for formally modeling the system formed by human and robotic agents. Such modeling enables reasoning about reliability, safety, correctness, and scalability of the system. The modeling, however, presents a daunting task. This research aspires to formally model scenarios where the robot and the human can have varying roles. The intent is to develop scalable methodologies that will endow the robot with the ability to adapt to human actions and preferences without changes to its underlying software or hardware. An assembly scenario will be used to mimic manufacturing settings where a robot and a human may work together and where the actions of the robot can improve the quality and safety of the work of the human. The project is a critical step towards making robots collaborative with and responsive to humans while allowing the human to be in control. This research will develop a framework for human-robot collaboration that integrates reactive synthesis from formal methods with robotic planning methods. By tightly combining the development of synthesis methods with robotics, it will pursue the development of a framework that is intuitive and scalable. The focus is on task-level collaboration as opposed to physical interaction with a human. The framework takes as input a task specification defined in a novel formal language interpreted over finite traces: a language suitable for robotics problems. It produces a policy for a robotic agent to assist a human agent regardless of which subtask or execution order for this subtask that the human agent chooses. The policy includes both high-level actions for the robotic agent as well as corresponding low-level motions that can be directly executed by the actual robot. One key novel component of the approach is the automated construction of abstractions for robotic manipulation that can be used by synthesis methods. The scalability of the proposed work will be investigated along different dimensions: the extent to which symbolic reasoning can be applied, the development of new synthesis algorithms, and the proper use of abstractions including their automatic refinement and the construction of factored abstractions. The trade-offs in using a combination of partial policies and replanning will be investigated as well as how to account for incomplete information due to incomplete observations. The theoretical contributions will be implemented on real robot hardware and demonstrated in experiments that are analogous to real-world assembly tasks.

This work has been supported by grant NSF NRI 1830549.

Related Publications

- T. Pan, R. Shome, and L. E. Kavraki, “Task and Motion Planning for Execution in the Real,” IEEE Transactions on Robotics, pp. 1–16, 2024. Honorable Mention, 2024 IEEE Transactions on Robotics (T-RO) King-Sun Fu Memorial Best

Paper Award.

BibTeX

@article{pan2024-tamper, author = {Pan, Tianyang and Shome, Rahul and Kavraki, Lydia E.}, journal = {IEEE Transactions on Robotics}, title = {Task and Motion Planning for Execution in the Real}, year = {2024}, volume = {}, number = {}, pages = {1-16}, doi = {10.1109/TRO.2024.3418550}, note = {Honorable Mention, 2024 IEEE Transactions on Robotics (T-RO) King-Sun Fu Memorial Best Paper Award.}, abstract = {Task and motion planning represents a powerful set of hybrid planning methods that combine reasoning over discrete task domains and continuous motion generation. Traditional reasoning necessitates task domain models and enough information to ground actions to motion planning queries. Gaps in this knowledge often arise from sources like occlusion or imprecise modeling. This work generates task and motion plans that include actions cannot be fully grounded at planning time. During execution, such an action is handled by a provided human-designed or learned closed-loop behavior. Execution combines offline planned motions and online behaviors till reaching the task goal. Failures of behaviors are fed back as constraints to find new plans. Forty real-robot trials and motivating demonstrations are performed to evaluate the proposed framework and compare against state-of-the-art. Results show faster execution time, less number of actions, and more success in problems where diverse gaps arise. The experiment data is shared for researchers to simulate these settings. The work shows promise in expanding the applicable class of realistic partially grounded problems that robots can address.} }DetailsAbstract

Task and motion planning represents a powerful set of hybrid planning methods that combine reasoning over discrete task domains and continuous motion generation. Traditional reasoning necessitates task domain models and enough information to ground actions to motion planning queries. Gaps in this knowledge often arise from sources like occlusion or imprecise modeling. This work generates task and motion plans that include actions cannot be fully grounded at planning time. During execution, such an action is handled by a provided human-designed or learned closed-loop behavior. Execution combines offline planned motions and online behaviors till reaching the task goal. Failures of behaviors are fed back as constraints to find new plans. Forty real-robot trials and motivating demonstrations are performed to evaluate the proposed framework and compare against state-of-the-art. Results show faster execution time, less number of actions, and more success in problems where diverse gaps arise. The experiment data is shared for researchers to simulate these settings. The work shows promise in expanding the applicable class of realistic partially grounded problems that robots can address. - K. Muvvala, A. M. Wells, M. Lahijanian, L. E. Kavraki, and M. Y. Vardi, “Stochastic Games for Interactive Manipulation Domains,” in IEEE International Conference on Robotics and Automation (ICRA), 2024, pp. 2513–2519.

BibTeX

@inproceedings{muvvala2024games, author = {Muvvala, Karan and Wells, Andrew M. and Lahijanian, Morteza and Kavraki, Lydia E. and Vardi, Moshe Y.}, title = {Stochastic Games for Interactive Manipulation Domains}, year = {2024}, booktitle = {IEEE International Conference on Robotics and Automation (ICRA)}, abstract = {As robots become more prevalent, the complexity of robot-robot, robot-human, and robot-environment interactions increases. In these interactions, a robot needs to consider not only the effects of its own actions, but also the effects of other agents’ actions and the possible interactions between agents. Previous works have considered reactive synthesis, where the human/environment is modeled as a deterministic, adversarial agent; as well as probabilistic synthesis, where the human/environment is modeled via a Markov chain. While they provide strong theoretical frameworks, there are still many aspects of human-robot interaction that cannot be fully expressed and many assumptions that must be made in each model. In this work, we propose stochastic games as a general model for human-robot interaction, which subsumes the expressivity of all previous representations. In addition, it allows us to make fewer modeling assumptions and leads to more natural and powerful models of interaction. We introduce the semantics of this abstraction and show how existing tools can be utilized to synthesize strategies to achieve complex tasks with guarantees. Further, we discuss the current computational limitations and improve the scalability by two orders of magnitude by a new way of constructing models for PRISM-games.}, doi = {10.1109/ICRA57147.2024.10611623}, pages = {2513--2519}, url = {https://ieeexplore.ieee.org/document/10611623}, keywords = {Computational modeling,Scalability,Semantics,Stochastic processes,Human-robot interaction,Games,Probabilistic logic} }DetailsAbstract

As robots become more prevalent, the complexity of robot-robot, robot-human, and robot-environment interactions increases. In these interactions, a robot needs to consider not only the effects of its own actions, but also the effects of other agents’ actions and the possible interactions between agents. Previous works have considered reactive synthesis, where the human/environment is modeled as a deterministic, adversarial agent; as well as probabilistic synthesis, where the human/environment is modeled via a Markov chain. While they provide strong theoretical frameworks, there are still many aspects of human-robot interaction that cannot be fully expressed and many assumptions that must be made in each model. In this work, we propose stochastic games as a general model for human-robot interaction, which subsumes the expressivity of all previous representations. In addition, it allows us to make fewer modeling assumptions and leads to more natural and powerful models of interaction. We introduce the semantics of this abstraction and show how existing tools can be utilized to synthesize strategies to achieve complex tasks with guarantees. Further, we discuss the current computational limitations and improve the scalability by two orders of magnitude by a new way of constructing models for PRISM-games. - K. Elimelech, Z. Kingston, W. Thomason, M. Y. Vardi, and L. E. Kavraki, “Accelerating long-horizon planning with affordance-directed dynamic grounding of

abstract skills,” in IEEE International Conference on Robotics and Automation (ICRA), 2024, pp. 12688–12695.

BibTeX

@inproceedings{elimelech2024skills, author = {Elimelech, Khen and Kingston, Zachary and Thomason, Wil and Vardi, Moshe Y. and Kavraki, Lydia E.}, title = {Accelerating long-horizon planning with affordance-directed dynamic grounding of abstract skills}, year = {2024}, booktitle = {IEEE International Conference on Robotics and Automation (ICRA)}, abstract = {Long-horizon task planning is important for robot autonomy, especially as a subroutine for frameworks such as Integrated Task and Motion Planning. However, task planning is computationally challenging and struggles to scale to realistic problem settings. We propose to accelerate task planning over an agent's lifetime by integrating abstract strategies: a generalizable planning experience encoding introduced in earlier work. In this work, we contribute a practical approach to planning with strategies by introducing a novel formalism of planning in a strategy-augmented domain. We also introduce and formulate the notion of a strategy's affordance, which indicates its predicted benefit to the solution, and use it to guide the planning and strategy grounding processes. Together, our observations yield an affordance-directed, lazy-search planning algorithm, which can seamlessly compose strategies and actions to solve long-horizon planning problems. We evaluate our planner in an object rearrangement domain, where we demonstrate performance benefits relative to a state-of-the-art task planner.}, pages = {12688--12695}, doi = {10.1109/ICRA57147.2024.10610486}, url = {https://ieeexplore.ieee.org/document/10610486} }DetailsAbstract

Long-horizon task planning is important for robot autonomy, especially as a subroutine for frameworks such as Integrated Task and Motion Planning. However, task planning is computationally challenging and struggles to scale to realistic problem settings. We propose to accelerate task planning over an agent’s lifetime by integrating abstract strategies: a generalizable planning experience encoding introduced in earlier work. In this work, we contribute a practical approach to planning with strategies by introducing a novel formalism of planning in a strategy-augmented domain. We also introduce and formulate the notion of a strategy’s affordance, which indicates its predicted benefit to the solution, and use it to guide the planning and strategy grounding processes. Together, our observations yield an affordance-directed, lazy-search planning algorithm, which can seamlessly compose strategies and actions to solve long-horizon planning problems. We evaluate our planner in an object rearrangement domain, where we demonstrate performance benefits relative to a state-of-the-art task planner. - K. Elimelech, L. E. Kavraki, and M. Y. Vardi, “Extracting generalizable skills from a single plan execution using abstraction-critical

state detection,” in 2023 International Conference on Robotics and Automation (ICRA), 2023, pp. 5772–5778.

BibTeX

@inproceedings{elimelech2023-extract-skills, title = {Extracting generalizable skills from a single plan execution using abstraction-critical state detection}, author = {Elimelech, Khen and Kavraki, Lydia E. and Vardi, Moshe Y.}, booktitle = {2023 International Conference on Robotics and Automation (ICRA)}, year = {2023}, pages = {5772--5778}, doi = {10.1109/ICRA48891.2023.10161270}, month = may, abstract = {Robotic task planning is computationally challenging. To reduce planning cost and support life-long operation, we must leverage prior planning experience. To this end, we address the problem of extracting reusable and generalizable abstract skills from successful plan executions. In previous work, we introduced a supporting framework, allowing us, theoretically, to extract an abstract skill from a single execution and later automatically adapt it and reuse it in new domains. We also proved that, given a library of such skills, we can significantly reduce the planning effort for new problems. Nevertheless, until now, abstract-skill extraction could only be performed manually. In this paper, we finally close the automation loop and explain how abstract skills can be practically and automatically extracted. We start by analyzing the desired qualities of an abstract skill and formulate skill extraction as an optimization problem. We then develop two extraction algorithms, based on the novel concept of abstraction-critical state detection. As we show experimentally, the approach is independent of any planning domain.} }DetailsAbstract

Robotic task planning is computationally challenging. To reduce planning cost and support life-long operation, we must leverage prior planning experience. To this end, we address the problem of extracting reusable and generalizable abstract skills from successful plan executions. In previous work, we introduced a supporting framework, allowing us, theoretically, to extract an abstract skill from a single execution and later automatically adapt it and reuse it in new domains. We also proved that, given a library of such skills, we can significantly reduce the planning effort for new problems. Nevertheless, until now, abstract-skill extraction could only be performed manually. In this paper, we finally close the automation loop and explain how abstract skills can be practically and automatically extracted. We start by analyzing the desired qualities of an abstract skill and formulate skill extraction as an optimization problem. We then develop two extraction algorithms, based on the novel concept of abstraction-critical state detection. As we show experimentally, the approach is independent of any planning domain. - K. Elimelech, L. E. Kavraki, and M. Y. Vardi, “Automatic Cross-domain Task Plan Transfer by Caching Abstract Skills,” in Algorithmic Foundations of Robotics XV, Cham, 2023, pp. 470–487. Appeared at Workshop on the Algorithmic Foundations of Robotics (WAFR) 2022.

BibTeX

@inproceedings{elimelech2022-wafr-skills, author = {Elimelech, Khen and Kavraki, Lydia E. and Vardi, Moshe Y.}, main_auth = {1}, editor = {LaValle, Steven M. and O'Kane, Jason M. and Otte, Michael and Sadigh, Dorsa and Tokekar, Pratap}, title = {Automatic Cross-domain Task Plan Transfer by Caching Abstract Skills}, booktitle = {Algorithmic Foundations of Robotics XV}, note = {Appeared at Workshop on the Algorithmic Foundations of Robotics (WAFR) 2022.}, pages = {470-487}, series = {Springer Proceedings in Advanced Robotics (SPAR)}, publisher = {Springer International Publishing}, doi = {10.1007/978-3-031-21090-7_28}, year = {2023}, abstract = {Solving realistic robotic task planning problems is computationally demanding. To better exploit the planning effort and reduce the future planning cost, it is important to increase the reusability of successful plans. To this end, we suggest a systematic and automatable approach for plan transfer, by rethinking the plan caching procedure. Specifically, instead of caching successful plans in their original domain, we suggest transferring them upon discovery to a dynamically-defined abstract domain and cache them as ``abstract skills'' there. This technique allows us to maintain a unified, standardized, and compact skill database, to avoid skill redundancy, and to support lifelong operation. Cached skills can later be reconstructed into new domains on demand, and be applied to new tasks, with no human intervention. This is made possible thanks to the novel concept of ``abstraction keys.'' An abstraction key, when coupled with a skill, provides all the necessary information to cache it, reconstruct it, and transfer it across all domains in which it is applicable---even domains we have yet to encounter. We practically demonstrate the approach by providing two examples of such keys and explain how they can be used in a manipulation planning domain.}, address = {Cham}, isbn = {978-3-031-21090-7} }DetailsAbstract

Solving realistic robotic task planning problems is computationally demanding. To better exploit the planning effort and reduce the future planning cost, it is important to increase the reusability of successful plans. To this end, we suggest a systematic and automatable approach for plan transfer, by rethinking the plan caching procedure. Specifically, instead of caching successful plans in their original domain, we suggest transferring them upon discovery to a dynamically-defined abstract domain and cache them as “abstract skills” there. This technique allows us to maintain a unified, standardized, and compact skill database, to avoid skill redundancy, and to support lifelong operation. Cached skills can later be reconstructed into new domains on demand, and be applied to new tasks, with no human intervention. This is made possible thanks to the novel concept of “abstraction keys.” An abstraction key, when coupled with a skill, provides all the necessary information to cache it, reconstruct it, and transfer it across all domains in which it is applicable—even domains we have yet to encounter. We practically demonstrate the approach by providing two examples of such keys and explain how they can be used in a manipulation planning domain. - K. Elimelech, L. E. Kavraki, and M. Y. Vardi, “Efficient task planning using abstract skills and dynamic road map matching,” in Robotics Research, Cham, 2023, pp. 487–503. Appeared at International Symposium of Robotics Research (ISRR) 2022.

BibTeX

@inproceedings{elimelech2022-isrr-skills, author = {Elimelech, Khen and Kavraki, Lydia E. and Vardi, Moshe Y.}, main_auth = {1}, editor = {Billard, Aude and Asfour, Tamim and Khatib, Oussama}, title = {Efficient task planning using abstract skills and dynamic road map matching}, booktitle = {Robotics Research}, note = {Appeared at International Symposium of Robotics Research (ISRR) 2022.}, doi = {10.1007/978-3-031-25555-7_33}, pages = {487--503}, year = {2023}, abstract = {Task planning is the problem of finding a discrete sequence of actions to achieve a goal. Unfortunately, task planning in robotic domains is computationally challenging. To address this, in our prior work, we explained how knowledge from a successful task solution can be cached for later use, as an ``abstract skill." Such a skill is represented as a trace of states (``road map") in an abstract space and can be matched with new tasks on-demand. This paper explains how one can use a library of abstract skills, derived from past planning experience, to reduce the computational cost of solving new task planning problems. As we explain, matching a skill to a task allows us to decompose it into independent sub-tasks, which can be quickly solved in parallel. This can be done automatically and dynamically during planning. We begin by formulating this problem of ``planning with skills" as a constraint satisfaction problem. We then provide a hierarchical solution algorithm, which integrates with any standard task planner. Finally, we experimentally demonstrate the computational benefits of the approach for reach-avoid tasks.}, publisher = {Springer Nature Switzerland}, address = {Cham}, isbn = {978-3-031-25555-7} }DetailsAbstract

Task planning is the problem of finding a discrete sequence of actions to achieve a goal. Unfortunately, task planning in robotic domains is computationally challenging. To address this, in our prior work, we explained how knowledge from a successful task solution can be cached for later use, as an “abstract skill." Such a skill is represented as a trace of states (“road map") in an abstract space and can be matched with new tasks on-demand. This paper explains how one can use a library of abstract skills, derived from past planning experience, to reduce the computational cost of solving new task planning problems. As we explain, matching a skill to a task allows us to decompose it into independent sub-tasks, which can be quickly solved in parallel. This can be done automatically and dynamically during planning. We begin by formulating this problem of “planning with skills" as a constraint satisfaction problem. We then provide a hierarchical solution algorithm, which integrates with any standard task planner. Finally, we experimentally demonstrate the computational benefits of the approach for reach-avoid tasks. - S. Bansal, L. E. Kavraki, M. V. Vardi, and A. Wells, “Synthesis from Satisficing and Temporal Goals,” in Proceedings of the AAAI Conference on Artifical Intelligence, 2022, vol. 36, no. 9, pp. 9679–9686.

BibTeX

@inproceedings{bansal2022-synthesis, title = {Synthesis from Satisficing and Temporal Goals}, author = {Bansal, Suguman and Kavraki, Lydia E. and Vardi, Moshe V. and Wells, Andrew}, booktitle = {Proceedings of the AAAI Conference on Artifical Intelligence}, month = jun, year = {2022}, volume = {36}, doi = {10.1609/aaai.v36i9.21202}, pages = {9679--9686}, number = {9}, abstract = {Reactive synthesis from high-level specifications that combine hard constraints expressed in Linear Temporal Logic (LTL) with soft constraints expressed by discounted-sum (DS) rewards has applications in planning and reinforcement learning. An existing approach combines techniques from LTL synthesis with optimization for the DS rewards but has failed to yield a sound algorithm. An alternative approach combining LTL synthesis with satisficing DS rewards (rewards that achieve a threshold) is sound and complete for integer discount factors, but, in practice, a fractional discount factor is desired. This work extends the existing satisficing approach, presenting the first sound algorithm for synthesis from LTL and DS rewards with fractional discount factors. The utility of our algorithm is demonstrated on robotic planning domains.}, keyword = {planning from high-level specifications}, publisher = {AAAI} }DetailsAbstract

Reactive synthesis from high-level specifications that combine hard constraints expressed in Linear Temporal Logic (LTL) with soft constraints expressed by discounted-sum (DS) rewards has applications in planning and reinforcement learning. An existing approach combines techniques from LTL synthesis with optimization for the DS rewards but has failed to yield a sound algorithm. An alternative approach combining LTL synthesis with satisficing DS rewards (rewards that achieve a threshold) is sound and complete for integer discount factors, but, in practice, a fractional discount factor is desired. This work extends the existing satisficing approach, presenting the first sound algorithm for synthesis from LTL and DS rewards with fractional discount factors. The utility of our algorithm is demonstrated on robotic planning domains. - T. Pan, A. M. Wells, R. Shome, and L. E. Kavraki, “Failure is an option: Task and Motion Planning with Failing Executions,” in 2022 International Conference on Robotics and Automation (ICRA), 2022, pp. 1947–1953.

BibTeX

@inproceedings{pan2022failing-execution, title = {Failure is an option: Task and Motion Planning with Failing Executions}, author = {Pan, Tianyang and Wells, Andrew M. and Shome, Rahul and Kavraki, Lydia E.}, booktitle = {2022 International Conference on Robotics and Automation (ICRA)}, month = may, year = {2022}, pages = {1947--1953}, doi = {10.1109/ICRA46639.2022.9812273}, abstract = {Future robotic deployments will require robots to be able to repeatedly solve a variety of tasks in application domains. Task and motion planning addresses complex robotic problems that combine discrete reasoning over states and actions and geometric interactions during action executions. Moving beyond deterministic settings, stochastic actions can be handled by modeling the problem as a Markov Decision Process. The underlying probabilities however are typically hard to model since failures might be caused by hardware imperfections, sensing noise, or physical interactions. We propose a framework to address a task and motion planning setting where actions can fail during execution. To achieve a task goal actions need to be computed and executed despite failures. The robot has to infer which actions are robust and for each new problem effectively choose a solution that reduces expected execution failures. The key idea is to continually recover and refine the underlying beliefs associated with actions across multiple different problems in the domain. Our proposed method can find solutions that reduce the expected number of discrete, executed actions. Results in physics-based simulation indicate that our method outperforms baseline replanning strategies to deal with failing executions}, keyword = {task and motion planning}, publisher = {IEEE} }DetailsAbstract

Future robotic deployments will require robots to be able to repeatedly solve a variety of tasks in application domains. Task and motion planning addresses complex robotic problems that combine discrete reasoning over states and actions and geometric interactions during action executions. Moving beyond deterministic settings, stochastic actions can be handled by modeling the problem as a Markov Decision Process. The underlying probabilities however are typically hard to model since failures might be caused by hardware imperfections, sensing noise, or physical interactions. We propose a framework to address a task and motion planning setting where actions can fail during execution. To achieve a task goal actions need to be computed and executed despite failures. The robot has to infer which actions are robust and for each new problem effectively choose a solution that reduces expected execution failures. The key idea is to continually recover and refine the underlying beliefs associated with actions across multiple different problems in the domain. Our proposed method can find solutions that reduce the expected number of discrete, executed actions. Results in physics-based simulation indicate that our method outperforms baseline replanning strategies to deal with failing executions - A. M. Wells, Z. Kingston, M. Lahijanian, L. E. Kavraki, and M. Y. Vardi, “Finite-Horizon Synthesis for Probabilistic Manipulation Domains,” in Proceedings of the IEEE International Conference on Robotics and Automation, 2021, pp. 6336–6342.

BibTeX

@inproceedings{wells2021-finite-horizon-synthesis, title = {{Finite-Horizon Synthesis for Probabilistic Manipulation Domains}}, author = {Wells, Andrew M. and Kingston, Zachary and Lahijanian, Morteza and Kavraki, Lydia E. and Vardi, Moshe Y.}, booktitle = {Proceedings of the {IEEE} International Conference on Robotics and Automation}, month = jun, year = {2021}, pages = {6336--6342}, doi = {10.1109/ICRA48506.2021.9561297}, abstract = {Robots have begun operating and collaborating with humans in industrial and social settings. This collaboration introduces challenges: the robot must plan while taking the human’s actions into account. In prior work, the problem was posed as a 2-player deterministic game, with a limited number of human moves. The limit on human moves is unintuitive, and in many settings determinism is undesirable. In this paper, we present a novel planning method for collaborative human-robot manipulation tasks via probabilistic synthesis. We introduce a probabilistic manipulation domain that captures the interaction by allowing for both robot and human actions with states that represent the configurations of the objects in the workspace. The task is specified using Linear Temporal Logic over finite traces (LTLf ). We then transform our manipulation domain into a Markov Decision Process (MDP) and synthesize an optimal policy to satisfy the specification on this MDP. We present two novel contributions: a formalization of probabilistic manipulation domains allowing us to apply existing techniques and a comparison of different encodings of these domains. Our framework is validated on a physical UR5 robot.}, keyword = {Synthesis, Probabilistic Systems} }DetailsAbstract

Robots have begun operating and collaborating with humans in industrial and social settings. This collaboration introduces challenges: the robot must plan while taking the human’s actions into account. In prior work, the problem was posed as a 2-player deterministic game, with a limited number of human moves. The limit on human moves is unintuitive, and in many settings determinism is undesirable. In this paper, we present a novel planning method for collaborative human-robot manipulation tasks via probabilistic synthesis. We introduce a probabilistic manipulation domain that captures the interaction by allowing for both robot and human actions with states that represent the configurations of the objects in the workspace. The task is specified using Linear Temporal Logic over finite traces (LTLf ). We then transform our manipulation domain into a Markov Decision Process (MDP) and synthesize an optimal policy to satisfy the specification on this MDP. We present two novel contributions: a formalization of probabilistic manipulation domains allowing us to apply existing techniques and a comparison of different encodings of these domains. Our framework is validated on a physical UR5 robot. - T. Pan, A. M. Wells, R. Shome, and L. E. Kavraki, “A General Task and Motion Planning Framework For Multiple Manipulators,” in IEEE/RSJ International Conference on Intelligent Robots and Systems, 2021, pp. 3168–3174.

BibTeX

@inproceedings{pan2021multiple_manipulators, title = {A General Task and Motion Planning Framework For Multiple Manipulators}, author = {Pan, Tianyang and Wells, Andrew M. and Shome, Rahul and Kavraki, Lydia E.}, booktitle = {{IEEE/RSJ} International Conference on Intelligent Robots and Systems}, year = {2021}, pages = {3168--3174}, doi = {10.1109/IROS51168.2021.9636119}, abstract = {Many manipulation tasks combine high-level discrete planning over actions with low-level motion planning over continuous robot motions. Task and motion planning (TMP) provides a powerful general framework to combine discrete and geometric reasoning, and solvers have been previously proposed for single-robot problems. Multi-robot TMP expands the range of TMP problems that can be solved but poses significant challenges when considering scalability and solution quality. We present a general TMP framework designed for multiple robotic manipulators. This is based on two contributions. First, we propose an optimal task planner designed to support simultaneous discrete actions. Second, we introduce an intermediate scheduler layer between task planner and motion planner to evaluate alternate robot assignments to these actions. This aggressively explores the search space and typically reduces the number of expensive task planning calls. Several benchmarks with a rich set of actions for two manipulators are evaluated. We show promising results in scalability and solution quality of our TMP framework with the scheduler for up to six objects. A demonstration indicates scalability to up to five robots.}, keyword = {task and motion planning, multi-robot systems} }DetailsAbstract

Many manipulation tasks combine high-level discrete planning over actions with low-level motion planning over continuous robot motions. Task and motion planning (TMP) provides a powerful general framework to combine discrete and geometric reasoning, and solvers have been previously proposed for single-robot problems. Multi-robot TMP expands the range of TMP problems that can be solved but poses significant challenges when considering scalability and solution quality. We present a general TMP framework designed for multiple robotic manipulators. This is based on two contributions. First, we propose an optimal task planner designed to support simultaneous discrete actions. Second, we introduce an intermediate scheduler layer between task planner and motion planner to evaluate alternate robot assignments to these actions. This aggressively explores the search space and typically reduces the number of expensive task planning calls. Several benchmarks with a rich set of actions for two manipulators are evaluated. We show promising results in scalability and solution quality of our TMP framework with the scheduler for up to six objects. A demonstration indicates scalability to up to five robots. - A. M. Wells, M. Lahijanian, L. E. Kavraki, and M. Y. Vardi, “LTLf Synthesis on Probabilistic Systems,” Electronic Proceedings in Theoretical Computer Science, vol. 326, pp. 166–181, Sep. 2020.

BibTeX

@article{wells2020-ltlf, title = {LTLf Synthesis on Probabilistic Systems}, author = {Wells, Andrew M. and Lahijanian, Morteza and Kavraki, Lydia E. and Vardi, Moshe Y.}, journal = {Electronic Proceedings in Theoretical Computer Science}, month = sep, year = {2020}, volume = {326}, pages = {166--181}, doi = {10.4204/eptcs.326.11}, abstract = {Many systems are naturally modeled as Markov Decision Processes (MDPs), combining probabilities and strategic actions. Given a model of a system as an MDP and some logical specification of system behavior, the goal of synthesis is to find a policy that maximizes the probability of achieving this behavior. A popular choice for defining behaviors is Linear Temporal Logic (LTL). Policy synthesis on MDPs for properties specified in LTL has been well studied. LTL, however, is defined over infinite traces, while many properties of interest are inherently finite. Linear Temporal Logic over finite traces (LTLf) has been used to express such properties, but no tools exist to solve policy synthesis for MDP behaviors given finite-trace properties. We present two algorithms for solving this synthesis problem: the first via reduction of LTLf to LTL and the second using native tools for LTLf. We compare the scalability of these two approaches for synthesis and show that the native approach offers better scalability compared to existing automaton generation tools for LTL.}, issn = {2075-2180}, keyword = {planning from high-level specifications, uncertainty}, publisher = {Open Publishing Association}, url = {http://dx.doi.org/10.4204/EPTCS.326.11} }DetailsAbstract

Many systems are naturally modeled as Markov Decision Processes (MDPs), combining probabilities and strategic actions. Given a model of a system as an MDP and some logical specification of system behavior, the goal of synthesis is to find a policy that maximizes the probability of achieving this behavior. A popular choice for defining behaviors is Linear Temporal Logic (LTL). Policy synthesis on MDPs for properties specified in LTL has been well studied. LTL, however, is defined over infinite traces, while many properties of interest are inherently finite. Linear Temporal Logic over finite traces (LTLf) has been used to express such properties, but no tools exist to solve policy synthesis for MDP behaviors given finite-trace properties. We present two algorithms for solving this synthesis problem: the first via reduction of LTLf to LTL and the second using native tools for LTLf. We compare the scalability of these two approaches for synthesis and show that the native approach offers better scalability compared to existing automaton generation tools for LTL. - J. D. Hernández, S. Sobti, A. Sciola, M. Moll, and L. E. Kavraki, “Increasing Robot Autonomy via Motion Planning and an Augmented Reality Interface,” IEEE Robotics and Automation Letters, vol. 5, no. 2, pp. 1017–1023, Apr. 2020.

BibTeX

@article{hernandez2020increasing-robot-autonomy-via-motion, title = {Increasing Robot Autonomy via Motion Planning and an Augmented Reality Interface}, author = {Hern{\'a}ndez, Juan David and Sobti, Shlok and Sciola, Anthony and Moll, Mark and Kavraki, Lydia E.}, journal = {IEEE Robotics and Automation Letters}, month = apr, year = {2020}, volume = {5}, number = {2}, pages = {1017--1023}, doi = {10.1109/LRA.2020.2967280}, abstract = {Recently, there has been a growing interest in robotic systems that are able to share workspaces and collaborate with humans. Such collaborative scenarios require efficient mechanisms to communicate human requests to a robot, as well as to transmit robot interpretations and intents to humans. Recent advances in augmented reality (AR) technologies have provided an alternative for such communication. Nonetheless, most of the existing work in human-robot interaction with AR devices is still limited to robot motion programming or teleoperation. In this paper, we present an alternative approach to command and collaborate with robots. Our approach uses an AR interface that allows a user to specify high-level requests to a robot, to preview, approve or modify the computed robot motions. The proposed approach exploits the robot's decisionmaking capabilities instead of requiring low-level motion specifications provided by the user. The latter is achieved by using a motion planner that can deal with high-level goals corresponding to regions in the robot configuration space. We present a proof of concept to validate our approach in different test scenarios, and we present a discussion of its applicability in collaborative environments.} }DetailsAbstract

Recently, there has been a growing interest in robotic systems that are able to share workspaces and collaborate with humans. Such collaborative scenarios require efficient mechanisms to communicate human requests to a robot, as well as to transmit robot interpretations and intents to humans. Recent advances in augmented reality (AR) technologies have provided an alternative for such communication. Nonetheless, most of the existing work in human-robot interaction with AR devices is still limited to robot motion programming or teleoperation. In this paper, we present an alternative approach to command and collaborate with robots. Our approach uses an AR interface that allows a user to specify high-level requests to a robot, to preview, approve or modify the computed robot motions. The proposed approach exploits the robot’s decisionmaking capabilities instead of requiring low-level motion specifications provided by the user. The latter is achieved by using a motion planner that can deal with high-level goals corresponding to regions in the robot configuration space. We present a proof of concept to validate our approach in different test scenarios, and we present a discussion of its applicability in collaborative environments. - T. Pan, C. K. Verginis, A. M. Wells, L. E. Kavraki, and D. V. Dimarogonas, “Augmenting Control Policies with Motion Planning for Robust and Safe Multi-robot

Navigation,” 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 6975–6981, 2020.

BibTeX

@article{pan2020augmenting-control-policies, title = {Augmenting Control Policies with Motion Planning for Robust and Safe Multi-robot Navigation}, author = {Pan, Tianyang and Verginis, Christos K. and Wells, Andrew M. and Kavraki, Lydia E. and Dimarogonas, Dimos V.}, booktitle = {2020 IEEE/RSJ International Conference on Intelligent Robots and Systems}, year = {2020}, pages = {6975--6981}, doi = {10.1109/IROS45743.2020.9341153}, abstract = {This work proposes a novel method of incorporating calls to a motion planner inside a potential field control policy for safe multi-robot navigation with uncertain dynamics. The proposed framework can handle more general scenes than the control policy and has low computational costs. Our work is robust to uncertain dynamics and quickly finds high-quality paths in scenarios generated from real-world floor plans. In the proposed approach, we attempt to follow the control policy as much as possible, and use calls to the motion planner to escape local minima. Trajectories returned from the motion planner are followed using a path-following controller guaranteeing robustness. We demonstrate the utility of our approach with experiments based on floor plans gathered from real buildings.}, keyword = {multi-robot systems} }DetailsAbstract

This work proposes a novel method of incorporating calls to a motion planner inside a potential field control policy for safe multi-robot navigation with uncertain dynamics. The proposed framework can handle more general scenes than the control policy and has low computational costs. Our work is robust to uncertain dynamics and quickly finds high-quality paths in scenarios generated from real-world floor plans. In the proposed approach, we attempt to follow the control policy as much as possible, and use calls to the motion planner to escape local minima. Trajectories returned from the motion planner are followed using a path-following controller guaranteeing robustness. We demonstrate the utility of our approach with experiments based on floor plans gathered from real buildings. - J. D. Hernández, M. Moll, and L. E. Kavraki, “Lazy Evaluation of Goal Specifications Guided by Motion Planning,” in Proceedings of the IEEE International Conference on Robotics and Automation, 2019, pp. 944–950.

BibTeX

@inproceedings{hernandez2019lazy-evaluation-of-goal-specifications, title = {Lazy Evaluation of Goal Specifications Guided by Motion Planning}, author = {Hern{\'a}ndez, Juan David and Moll, Mark and Kavraki, Lydia E.}, booktitle = {Proceedings of the {IEEE} International Conference on Robotics and Automation}, month = may, year = {2019}, pages = {944--950}, doi = {10.1109/ICRA.2019.8793570}, abstract = {Nowadays robotic systems are expected to share workspaces and collaborate with humans. In such collaborative environments, an important challenge is to ground or establish the correct semantic interpretation of a human request. Once such an interpretation is available, the request must be translated into robot motion commands in order to complete the desired task. Nonetheless, there are some cases in which a human request cannot be grounded to a unique interpretation, thus leading to an ambiguous request. A simple example could be to ask a robot to ``put a cup on the table,'' where multiple cups are available. In order to deal with this kind of ambiguous request, and therefore, to make the human-robot interaction easy and as seamless as possible, we propose a delayed or lazy variable grounding. Our approach uses a motion planner, which considers and determines the feasibility of the different valid groundings by representing them with goal regions. This new approach also includes a reward-penalty strategy, which attempts to prioritize those goal regions that are more promising to provide a final solution. We validate our approach by solving requests with multiple valid alternatives in both simulation and real-world experiments.}, keyword = {planning from high-level specifications, fundamentals of sampling-based motion planning} }DetailsAbstract

Nowadays robotic systems are expected to share workspaces and collaborate with humans. In such collaborative environments, an important challenge is to ground or establish the correct semantic interpretation of a human request. Once such an interpretation is available, the request must be translated into robot motion commands in order to complete the desired task. Nonetheless, there are some cases in which a human request cannot be grounded to a unique interpretation, thus leading to an ambiguous request. A simple example could be to ask a robot to “put a cup on the table,” where multiple cups are available. In order to deal with this kind of ambiguous request, and therefore, to make the human-robot interaction easy and as seamless as possible, we propose a delayed or lazy variable grounding. Our approach uses a motion planner, which considers and determines the feasibility of the different valid groundings by representing them with goal regions. This new approach also includes a reward-penalty strategy, which attempts to prioritize those goal regions that are more promising to provide a final solution. We validate our approach by solving requests with multiple valid alternatives in both simulation and real-world experiments. - K. He, A. M. Wells, L. E. Kavraki, and M. Y. Vardi, “Efficient Symbolic Reactive Synthesis for Finite-Horizon Tasks,” in Proceedings of the IEEE International Conference on Robotics and Automation, 2019, pp. 8993–8999. (Best paper award in Cognitive Robotics)

BibTeX

@inproceedings{he2019efficient-symbolic-reactive-synthesis-for-finite-horizon-tasks, title = {Efficient Symbolic Reactive Synthesis for Finite-Horizon Tasks}, author = {He, Keliang and Wells, Andrew M. and Kavraki, Lydia E. and Vardi, Moshe Y.}, booktitle = {Proceedings of the {IEEE} International Conference on Robotics and Automation}, month = may, year = {2019}, pages = {8993--8999}, doi = {10.1109/ICRA.2019.8794170}, abstract = {When humans and robots perform complex tasks together, the robot must have a strategy to choose its actions based on observed human behavior. One well-studied approach for finding such strategies is reactive synthesis. Existing ap- proaches for finite-horizon tasks have used an explicit state approach, which incurs high runtime. In this work, we present a compositional approach to perform synthesis for finite- horizon tasks based on binary decision diagrams. We show that for pick-and-place tasks, the compositional approach achieves exponential speed-ups compared to previous approaches. We demonstrate the synthesized strategy on a UR5 robot.}, keyword = {planning from high-level specifications}, note = {(Best paper award in Cognitive Robotics)} }DetailsAbstract

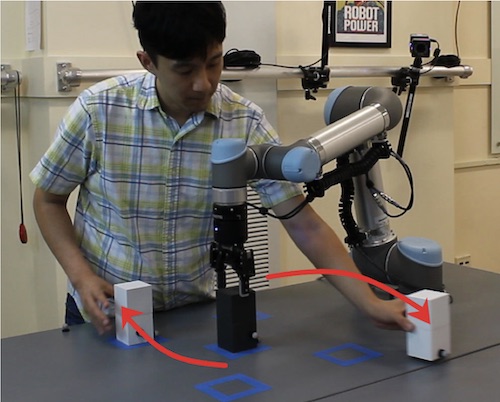

When humans and robots perform complex tasks together, the robot must have a strategy to choose its actions based on observed human behavior. One well-studied approach for finding such strategies is reactive synthesis. Existing ap- proaches for finite-horizon tasks have used an explicit state approach, which incurs high runtime. In this work, we present a compositional approach to perform synthesis for finite- horizon tasks based on binary decision diagrams. We show that for pick-and-place tasks, the compositional approach achieves exponential speed-ups compared to previous approaches. We demonstrate the synthesized strategy on a UR5 robot. - K. He, M. Lahijanian, L. E. Kavraki, and M. Y. Vardi, “Automated Abstraction of Manipulation Domains for Cost-Based Reactive Synthesis,” IEEE Robotics and Automation Letters, vol. 4, no. 2, pp. 285–292, Apr. 2019.

BibTeX

@article{he2019automated-abstraction-of-manipulation, title = {Automated Abstraction of Manipulation Domains for Cost-Based Reactive Synthesis}, author = {He, K. and Lahijanian, M. and Kavraki, L. E. and Vardi, Moshe Y.}, journal = {IEEE Robotics and Automation Letters}, month = apr, year = {2019}, volume = {4}, number = {2}, pages = {285--292}, doi = {10.1109/LRA.2018.2889191}, abstract = {When robotic manipulators perform high-level tasks in the presence of another agent, e.g., a human, they must have a strategy that considers possible interferences in order to guarantee task completion and efficient resource usage. One approach to generate such strategies is called reactive synthesis. Reactive synthesis requires an abstraction, which is a discrete structure that captures the domain in which the robot and other agents operate. Existing works discuss the construction of abstractions for mobile robots through space decomposition; however, they cannot be applied to manipulation domains due to the curse of dimensionality caused by the manipulator and the objects. In this work, we present the first algorithm for automatic abstraction construction for reactive synthesis of manipulation tasks. We focus on tasks that involve picking and placing objects with possible extensions to other types of actions. The abstraction also provides an upper bound on path-based costs for robot actions. We combine this abstraction algorithm with our reactive synthesis planner to construct correct-by-construction plans. We demonstrate the power of the framework on examples of a UR5 robot completing complex tasks in face of interferences by a human.}, keyword = {planning from high-level specifications} }DetailsAbstract

When robotic manipulators perform high-level tasks in the presence of another agent, e.g., a human, they must have a strategy that considers possible interferences in order to guarantee task completion and efficient resource usage. One approach to generate such strategies is called reactive synthesis. Reactive synthesis requires an abstraction, which is a discrete structure that captures the domain in which the robot and other agents operate. Existing works discuss the construction of abstractions for mobile robots through space decomposition; however, they cannot be applied to manipulation domains due to the curse of dimensionality caused by the manipulator and the objects. In this work, we present the first algorithm for automatic abstraction construction for reactive synthesis of manipulation tasks. We focus on tasks that involve picking and placing objects with possible extensions to other types of actions. The abstraction also provides an upper bound on path-based costs for robot actions. We combine this abstraction algorithm with our reactive synthesis planner to construct correct-by-construction plans. We demonstrate the power of the framework on examples of a UR5 robot completing complex tasks in face of interferences by a human.